New Trends Make 10 Gigabit Ethernet the Data-Center Performance Choice



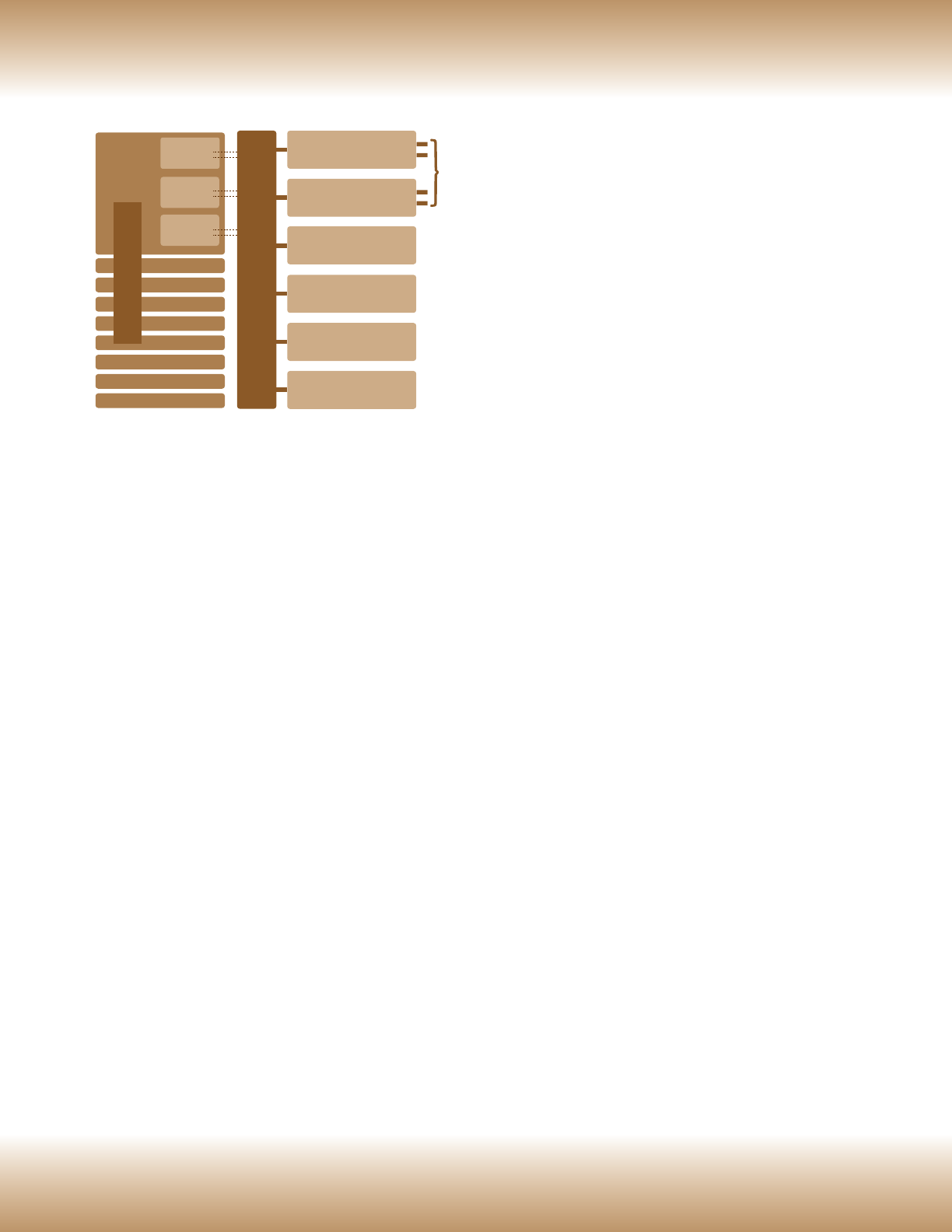

82598 10 Gigabit Ethernet Controller. When used

as LAN on motherboard (LOM) for blades, the

integrated MAC and XAUI ports allow direct 10GbE

connectivity to the blade system mid-plane without

use of an expensive Physical (PHY) layer device. As a

result, PHY devices can be pushed out of the blades

and consolidated at the switch ports, as shown

in Figure 3. Since PHY devices, especially for fiber

connectivity, account for half or more of

the cost of NICs, the switch-level PHY consolidation

and sharing indicated in Figure 3 results in

significant reductions in 10GbE cost per port

for blade systems. Such significant performance

increases and cost reductions, especially with the

advent of 10GbE over twisted pair, will promote

10GbE connectivity throughout the data center.
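The cost argument above can be illustrated with a small back-of-the-envelope calculation. This is only a sketch under stated assumptions: the dollar figures, the blade count, and the number of shared switch-side PHYs are hypothetical values chosen for illustration, not numbers from this paper, and the function names are invented here.

```python
# Hypothetical per-port cost model for PHY consolidation in a blade chassis.
# All prices and counts are illustrative assumptions, not vendor figures.

def cost_per_port_with_nic_phy(mac_cost, phy_cost):
    """Each blade carries its own MAC and its own PHY."""
    return mac_cost + phy_cost


def cost_per_port_consolidated(mac_cost, phy_cost, blades, switch_phys):
    """Each blade carries only a MAC; a smaller pool of PHYs at the
    switch ports is shared by all blades in the chassis."""
    return mac_cost + phy_cost * switch_phys / blades


# Assume the PHY is half the cost of a conventional NIC (500 of 1000).
before = cost_per_port_with_nic_phy(mac_cost=500, phy_cost=500)
after = cost_per_port_consolidated(mac_cost=500, phy_cost=500,
                                   blades=16, switch_phys=4)
saving = 1 - after / before

print(f"per-port cost with PHY on every blade: {before}")
print(f"per-port cost with PHYs at the switch: {after}")
print(f"relative saving: {saving:.1%}")
```

With these assumed inputs the per-port cost drops from 1000 to 625. The saving grows as the PHY share of NIC cost rises or as more blades share each switch-side PHY.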

THE EMERGENCE OF STORAGE OVER ETHERNET

So far, discussion has focused primarily on compute

platform and I/O performance as driving the need

for 10GbE connectivity. Storage is another related

area that can benefit from the bandwidth gains

and falling prices of 10GbE.

Within the network and data center, there are

three traditional types of storage. These are direct-

attached storage (DAS), network-attached storage

(NAS), and the storage-area network (SAN). Each has

its own characteristics and advantages; however, SAN

is emerging as the most advantageous in terms

of scalability and flexibility for use in data centers

and high-density computing applications.

The main drawback to SAN implementation in

the past has been equipment expense and the

specially trained staff necessary for installing and

maintaining the Fibre Channel (FC) fabric used

for SANs. Nonetheless, there has been sufficient

demand for the storage benefits of SANs for Fibre

Channel to become well established in that niche

by virtue of its high bandwidth.

Although 10GbE has been around for several years,

it is now poised to take a position as an alternative

fabric for SAN applications. This was made possible by

the Internet Small Computer System Interface (iSCSI)

standard. The iSCSI standard is an extension of the

SCSI protocol for block transfers used in most storage

devices and also used by Fibre Channel. The Internet

extension defines how SCSI block transfers are

carried over IP, allowing standard Ethernet

infrastructure elements to be used as a SAN fabric.
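To make the layering concrete, the sketch below wraps a SCSI READ(10) command block in a 48-byte iSCSI header, which an initiator would then send over an ordinary TCP/IP connection. It is a simplified illustration, not a working initiator: the field layout follows the SCSI Command PDU as described in the iSCSI RFC (RFC 7143) as best understood here, the LUN encoding is reduced to the simplest single-level case, and the function names and sample values are hypothetical.

```python
import struct

ISCSI_OP_SCSI_CMD = 0x01  # iSCSI opcode: SCSI Command PDU
FLAG_FINAL = 0x80         # F bit: last PDU of this command
FLAG_READ = 0x40          # R bit: data flows from target to initiator
SCSI_READ_10 = 0x28       # SCSI READ(10) operation code
BLOCK_SIZE = 512          # assumed logical block size in bytes


def read10_cdb(lba, num_blocks):
    """Build a 10-byte READ(10) command descriptor block (CDB):
    opcode, flags, 4-byte LBA, group, 2-byte transfer length, control."""
    return struct.pack(">BBIBHB", SCSI_READ_10, 0, lba, 0, num_blocks, 0)


def scsi_command_pdu(cdb, lun, task_tag, expected_len, cmd_sn, exp_stat_sn):
    """Wrap a SCSI CDB in a 48-byte iSCSI basic header segment."""
    lun_field = bytes([0, lun]) + bytes(6)   # simplified single-level LUN
    return struct.pack(
        ">BB2xB3s8sIIII16s",
        ISCSI_OP_SCSI_CMD,
        FLAG_FINAL | FLAG_READ,
        0,                       # TotalAHSLength: no additional headers
        (0).to_bytes(3, "big"),  # DataSegmentLength: a read carries no data out
        lun_field,
        task_tag,                # initiator task tag
        expected_len,            # expected data transfer length in bytes
        cmd_sn,
        exp_stat_sn,
        cdb.ljust(16, b"\x00"),  # CDB field is 16 bytes, zero padded
    )


cdb = read10_cdb(lba=2048, num_blocks=8)
pdu = scsi_command_pdu(cdb, lun=0, task_tag=1,
                       expected_len=8 * BLOCK_SIZE, cmd_sn=1, exp_stat_sn=1)
print(len(pdu), pdu.hex())
```

The resulting bytes are plain TCP payload, which is why any Ethernet NIC and standard switch can carry them. A real initiator would also handle login, session negotiation, and the data and status PDUs returned by the target.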

Basic iSCSI capability is implemented through

native iSCSI initiators provided in most OSs today.

This allows any Ethernet NIC to be used as a SAN

interface device. However, the absence of a remote-boot

capability left such implementations short of the

full capabilities provided by Fibre Channel fabrics.

Initially, iSCSI host bus adapters (HBAs) offered a

solution, but they were expensive specialty items

much like Fibre Channel adapters.

To resolve the remote-boot issue, Intel provides

iSCSI Remote Boot support with all Intel PCIe server

adapters, including the new generation of Intel

10 Gigabit Ethernet Server Adapters. This allows

use of the greater bandwidth of 10GbE in new

SAN implementations. Additionally, Ethernet and

Fibre Channel can coexist on the same network.

Figure 3. Typical blade system architecture. Recent

advances have moved 10GbE connectivity to the blade

level and reduced per-port costs by consolidating

expensive PHY-level devices at the switch port.

[Figure 3 labels: 10GbE Switch with PHY; Fibre Channel Switch; Power Supply (x2); Blade Mgmt (x2); Compute Blades with 10GbE, Fibre Channel, and Mgmt connections; Mid-plane]