New Trends Make 10 Gigabit Ethernet the Data-Center Performance Choice

WHITE PAPER | New Trends Make 10 Gigabit Ethernet the Data-Center Performance Choice


for sharing ports and switching data between multiple VMs. In brief, VMDq provides acceleration with multiple queues that sort and group packets for greater server and VM I/O efficiency.

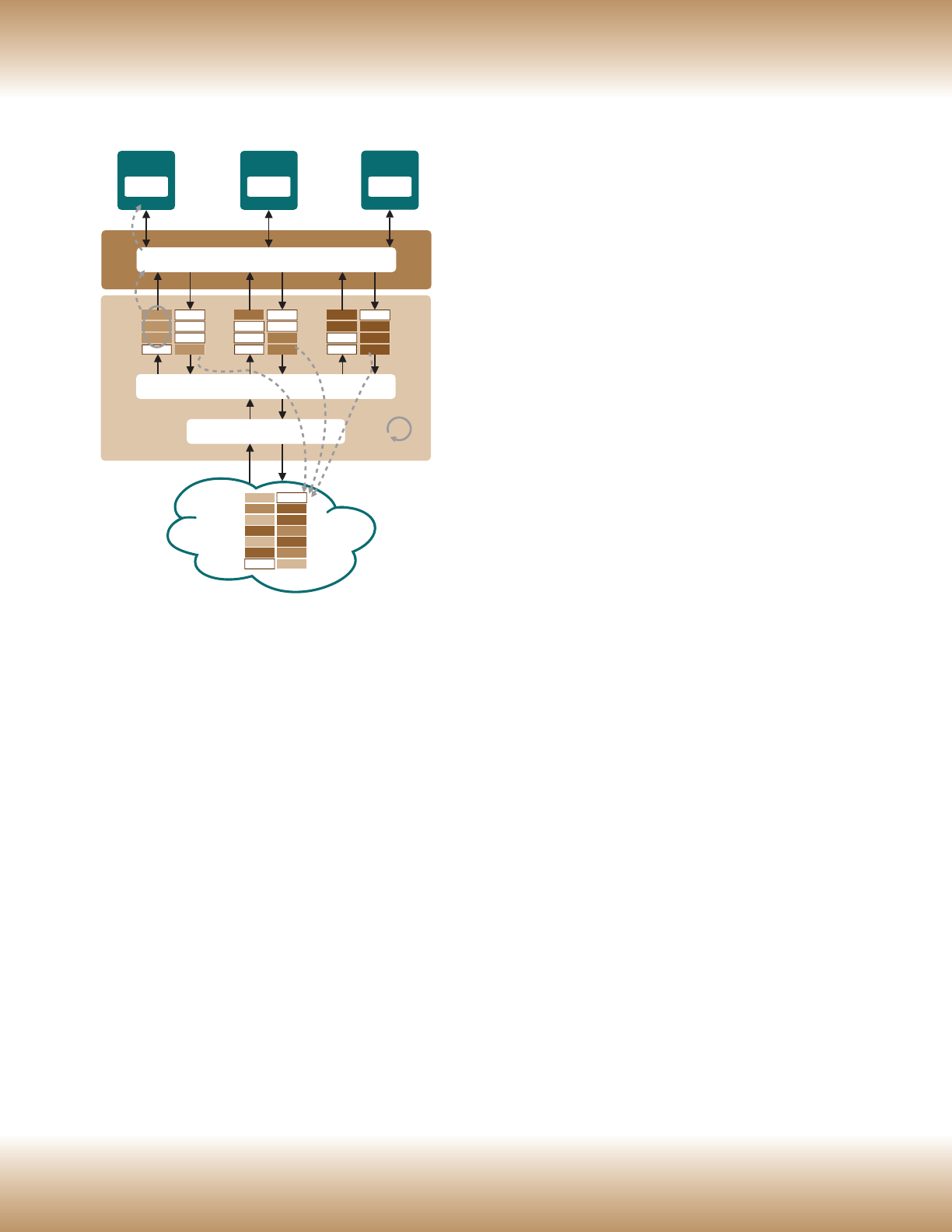

Figure 2 illustrates the key elements of VMDq. The virtualized server at the top of the diagram hosts several VMs above a virtualization software layer that includes a Layer 2 software switch. The server adapter with VMDq connects the virtualized server to the LAN.

On the receive side, VMDq sorts incoming packets into queues (Rx1, Rx2, … Rxn) for the destination VMs and sends them in groups to the Layer 2 software switch. This reduces the number of forwarding decisions and data copies the software switch must make.
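The receive-side sorting described above can be sketched in a few lines of Python. This is a minimal, hypothetical model, not Intel's implementation: it assumes each VM is identified by a single destination MAC address and that packets with an unrecognized MAC land in a default queue 0. The names `Packet`, `Layer2Sorter`, `receive`, and `drain` are illustrative only. What it shows is that classification happens once per packet in the adapter, so the software switch receives pre-sorted batches rather than deciding on every packet.

```python
from __future__ import annotations

from collections import defaultdict, deque
from dataclasses import dataclass


@dataclass(frozen=True)
class Packet:
    dst_mac: str
    payload: bytes


class Layer2Sorter:
    """Hypothetical model of VMDq receive-side sorting.

    Assumption: one Rx queue per VM, selected by destination MAC address;
    unknown MACs go to a default queue.
    """

    def __init__(self, mac_to_queue: dict[str, int], default_queue: int = 0):
        self.mac_to_queue = mac_to_queue
        self.default_queue = default_queue
        self.rx_queues: dict[int, deque[Packet]] = defaultdict(deque)

    def receive(self, pkt: Packet) -> None:
        # Done by the adapter: one MAC lookup per packet picks the Rx queue.
        q = self.mac_to_queue.get(pkt.dst_mac, self.default_queue)
        self.rx_queues[q].append(pkt)

    def drain(self) -> list[tuple[int, list[Packet]]]:
        # Hand each non-empty queue to the software switch as one group.
        batches = []
        for q in sorted(self.rx_queues):
            batch = list(self.rx_queues[q])
            self.rx_queues[q].clear()
            if batch:
                batches.append((q, batch))
        return batches


if __name__ == "__main__":
    sorter = Layer2Sorter({"02:00:00:00:00:01": 1, "02:00:00:00:00:02": 2})
    for mac in ["02:00:00:00:00:01", "02:00:00:00:00:02",
                "02:00:00:00:00:01", "02:00:00:00:00:99"]:
        sorter.receive(Packet(mac, b"..."))
    for queue, batch in sorter.drain():
        print(f"Rx{queue}: {len(batch)} packet(s)")
```

In this model the software switch makes one forwarding decision per batch returned by `drain()` instead of one per packet, which is the saving the text attributes to VMDq.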

[Figure 2 diagram: a virtualized server (VM1, VM2 … VMn, each with a vNIC, above a Layer 2 software switch) connects through an adapter with VMDq (Layer 2 sorter, receive queues Rx1 … Rxn, transmit queues Tx1 … Txn, MAC/PHY) to the LAN.]

Figure 2. VMDq and Intel® 10 Gigabit Ethernet Server Adapters. VMDq provides more efficient I/O in virtualized servers.

On the transmit side (Tx1, Tx2, … Txn), VMDq services the transmit queues in round-robin order. This ensures transmit fairness and prevents head-of-line blocking, so traffic from one VM cannot hold up traffic from the others. Together, the receive-side and transmit-side enhancements reduce CPU usage and improve I/O performance in a virtualized environment.
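A similarly hedged sketch of round-robin transmit servicing follows. It assumes one transmit queue per VM, arbitration at frame granularity, and a per-call `budget` of frames the adapter can send; `round_robin_transmit` is a hypothetical name, and real adapters may arbitrate differently (for example, by bytes or with weights).

```python
from __future__ import annotations

from collections import deque


def round_robin_transmit(tx_queues: list[deque[str]], budget: int) -> list[str]:
    """Send up to `budget` frames, taking at most one frame from each
    non-empty Tx queue per pass so no queue can starve the others.

    Hypothetical model: frames are strings and are removed from the
    queues as they are sent.
    """
    sent: list[str] = []
    while len(sent) < budget and any(tx_queues):
        for q in tx_queues:
            if len(sent) >= budget:
                break
            if q:  # skip idle queues
                sent.append(q.popleft())
    return sent


if __name__ == "__main__":
    queues = [deque(["a1", "a2", "a3", "a4"]), deque(["b1"]), deque(["c1", "c2"])]
    print(round_robin_transmit(queues, budget=10))
    # prints ['a1', 'b1', 'c1', 'a2', 'c2', 'a3', 'a4']
```

Here the single frame queued by the second VM goes out second, even though the first VM has a four-frame backlog. With one shared FIFO it would wait behind all four frames, which is the kind of head-of-line blocking the round-robin scheme avoids.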

HIGH-DENSITY COMPUTING ENVIRONMENTS

The performance multiples offered by multi-core processors, along with their lower energy and cooling requirements, make them an increasingly popular choice for high-density computing environments. Such environments include high-performance computing (HPC), grid computing, and blade computer systems. There is also a newly emerging category called dense computing, which consists of a rack-mounted platform with multiple motherboards sharing resources.

While their architectures differ, high-density computing environments have much in common. They are almost always constrained by power, cooling, and space, which is why multi-core processors are taking over in this environment. Their I/O requirements are also high today, as typified by the blade system shown in Figure 3.

Typical blade systems in the past used Fibre Channel* for storage connectivity and GbE for network connectivity. Today, however, blade systems are moving to Quad-Core Intel Xeon processor-based blades with 10GbE connectivity, as shown in Figure 3. This shift is supported by the emergence of universal or virtual fabrics and by the migration of 10GbE from the blade chassis into the blades themselves. Moreover, 10GbE in blades now costs on the order of a few hundred dollars per port instead of thousands.

A key reason for the cost reduction of 10GbE in blades is the fully integrated 10GbE Media Access Control (MAC) and XAUI ports³ on the new Intel®