Datasheet

QLogic QLE7340 Single-Port 40 Gbps QDR InfiniBand Host Channel Adapter

Part number information

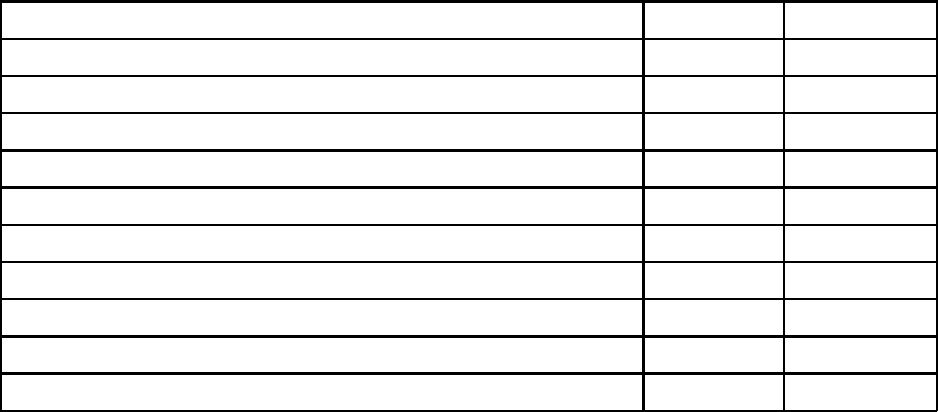

Table 1. Ordering part numbers and feature codes

| Description | Part number | Feature code |
|---|---|---|
| QLogic QLE7340 Single-Port 40 Gbps QDR InfiniBand HCA | 59Y1888 | 5763 |
| 0.5 m QLogic Copper QDR InfiniBand QSFP 30AWG Cable | 59Y1892 | 3725 |
| 1 m QLogic Copper QDR InfiniBand QSFP 30AWG Cable | 59Y1896 | 3726 |
| 3 m QLogic Copper QDR InfiniBand QSFP 28AWG Cable | 59Y1900 | 3727 |
| 3 m QLogic Optical QDR InfiniBand QSFP Cable | 59Y1920 | 3731 |
| 10 m QLogic Optical QDR InfiniBand QSFP Cable | 59Y1924 | 3732 |
| 30 m QLogic Optical QDR InfiniBand QSFP Cable | 59Y1928 | 3733 |
| 3 m IBM Optical QDR InfiniBand QSFP Cable | 49Y0488 | 5989 |
| 10 m IBM Optical QDR InfiniBand QSFP Cable | 49Y0491 | 5990 |
| 30 m IBM Optical QDR InfiniBand QSFP Cable | 49Y0494 | 5991 |

Features and benefits

The QLogic QLE7340 HCA has the following features and specifications:

- 40 Gbps InfiniBand interface (40/20/10 Gbps auto-negotiation)
- 3400 MBps unidirectional throughput
- Approximately 30 million messages processed per second (non-coalesced)
- Approximately 1.0 microsecond latency that remains low as the fabric is scaled
- Multiple virtual lanes (VLs) for unique quality of service (QoS) levels per lane over the same physical port
- TrueScale architecture, with MSI-X interrupt handling, optimized for multi-core compute nodes
- Operates without external memory
- Optional data scrambling in InfiniBand link
- Complies with InfiniBand Trade Association 1.2 standard
- Supports OpenFabrics Alliance (OFED) software distributions; WinOF support planned for 1Q/2010

Key benefits of the QLogic QLE7340 HCA are:

- High performance: Quad Data Rate (QDR) InfiniBand (IB) delivers 40 Gbps per port (4x10 Gbps), providing the necessary bandwidth for high-throughput applications. With one of the highest message rates and lowest latencies of any IB adapter, the QLE7340 provides superior HPC application performance.
- Superior scalability: QLogic's TrueScale architecture is designed to deliver near-linear scalability. As additional compute resources are added to a cluster, latency remains low and the message rate scales with the size of the fabric, resulting in maximum utilization of compute resources.
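The link-rate figures quoted above can be sanity-checked with a little arithmetic. The sketch below is illustrative only and rests on assumptions not stated in this datasheet: the 40/20/10 Gbps rates are taken to be the QDR/DDR/SDR speeds of a 4x link, the link is assumed to use 8b/10b line encoding (8 payload bits per 10 signaled bits), and "MBps" is read as megabytes per second. All names in the code are hypothetical.

```python
# Assumed per-lane signaling rates, in Mbps, for the three negotiated speeds.
LANE_MBPS = {"SDR": 2_500, "DDR": 5_000, "QDR": 10_000}
LANES = 4  # 4x link width, matching the "4x10 Gbps" figure in the text

# Throughput figure quoted in the feature list, read as megabytes per second.
QUOTED_THROUGHPUT_MBYTES = 3_400


def signaling_mbps(speed: str) -> int:
    """Raw signaling rate of the 4x link in Mbps."""
    return LANE_MBPS[speed] * LANES


def payload_mbytes_per_s(speed: str) -> int:
    """Payload ceiling in MB/s, assuming 8b/10b encoding (an assumption)."""
    data_mbps = signaling_mbps(speed) * 8 // 10  # 8 data bits per 10 line bits
    return data_mbps // 8                        # bits to bytes


for speed in ("SDR", "DDR", "QDR"):
    print(speed, signaling_mbps(speed) // 1000, "Gbps signaling,",
          payload_mbytes_per_s(speed), "MB/s payload ceiling")

# Fraction of the assumed QDR payload ceiling that the quoted figure represents.
quoted_fraction = QUOTED_THROUGHPUT_MBYTES / payload_mbytes_per_s("QDR")
print(f"Quoted throughput is {quoted_fraction:.0%} of the assumed QDR ceiling")
```

Under these assumptions, the quoted 3400 MBps sits below the encoded link ceiling, which is plausible for a sustained figure measured through the host bus rather than a raw line rate.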