HP-MPI Version 2.3.1 for Linux Release Note

Table of Contents

- HP-MPI V2.3.1 for Linux Release Note

- 1 Information About This Release

- 2 New or Changed Features in V2.3.1

- 3 New or Changed Features in V2.3

- 3.1 Options Supported Only on HP Hardware

- 3.2 System Check

- 3.3 Default Message Size Changed For -ndd

- 3.4 MPICH2 Compatibility

- 3.5 Support for Large Messages

- 3.6 Redundant License Servers

- 3.7 License Release/Regain on Suspend/Resume

- 3.8 Expanded Functionality for -ha

- 3.8.1 Support for High Availability on InfiniBand Verbs

- 3.8.2 Highly Available Infrastructure (-ha:infra)

- 3.8.3 Using MPI_Comm_connect and MPI_Comm_accept

- 3.8.4 Using MPI_Comm_disconnect

- 3.8.5 Instrumentation and High Availability Mode

- 3.8.6 Failure Recovery (-ha:recover)

- 3.8.7 Network High Availability (-ha:net)

- 3.8.8 Failure Detection (-ha:detect)

- 3.8.9 Clarification of the Functionality of Completion Routines in High Availability Mode

- 3.9 Enhanced InfiniBand Support for Dynamic Processes

- 3.10 Singleton Launching

- 3.11 Using the -stdio=files Option

- 3.12 Using the -stdio=none Option

- 3.13 Expanded Lightweight Instrumentation

- 3.14 The api option to MPI_INSTR

- 3.15 New mpirun option -xrc

- 4 Known Issues and Workarounds

- 4.1 Running on iWarp Hardware

- 4.2 Running with Chelsio uDAPL

- 4.3 Mapping Ranks to a CPU

- 4.4 OFED Firmware

- 4.5 Spawn on Remote Nodes

- 4.6 Default Interconnect for -ha Option

- 4.7 Linking Without Compiler Wrappers

- 4.8 Locating the Instrumentation Output File

- 4.9 Using the ScaLAPACK Library

- 4.10 Increasing Shared Memory Segment Size

- 4.11 Using MPI_FLUSH_FCACHE

- 4.12 Using MPI_REMSH

- 4.13 Increasing Pinned Memory

- 4.14 Disabling Fork Safety

- 4.15 Using Fork with OFED

- 4.16 Memory Pinning with OFED 1.2

- 4.17 Upgrading to OFED 1.2

- 4.18 Increasing the nofile Limit

- 4.19 Using appfiles on HP XC Quadrics

- 4.20 Using MPI_Bcast on Quadrics

- 4.21 MPI_Issend Call Limitation on Myrinet MX

- 4.22 Terminating Shells

- 4.23 Disabling Interval Timer Conflicts

- 4.24 libpthread Dependency

- 4.25 Fortran Calls Wrappers

- 4.26 Bindings for C++ and Fortran 90

- 4.27 Using HP Caliper

- 4.28 Using -tv

- 4.29 Extended Collectives with Lightweight Instrumentation

- 4.30 Using -ha with Diagnostic Library

- 4.31 Using MPICH with Diagnostic Library

- 4.32 Using -ha with MPICH

- 4.33 Using MPI-2 with Diagnostic Library

- 4.34 Quadrics Memory Leak

- 5 Installation Information

- 6 Licensing Information

- 7 Additional Product Information

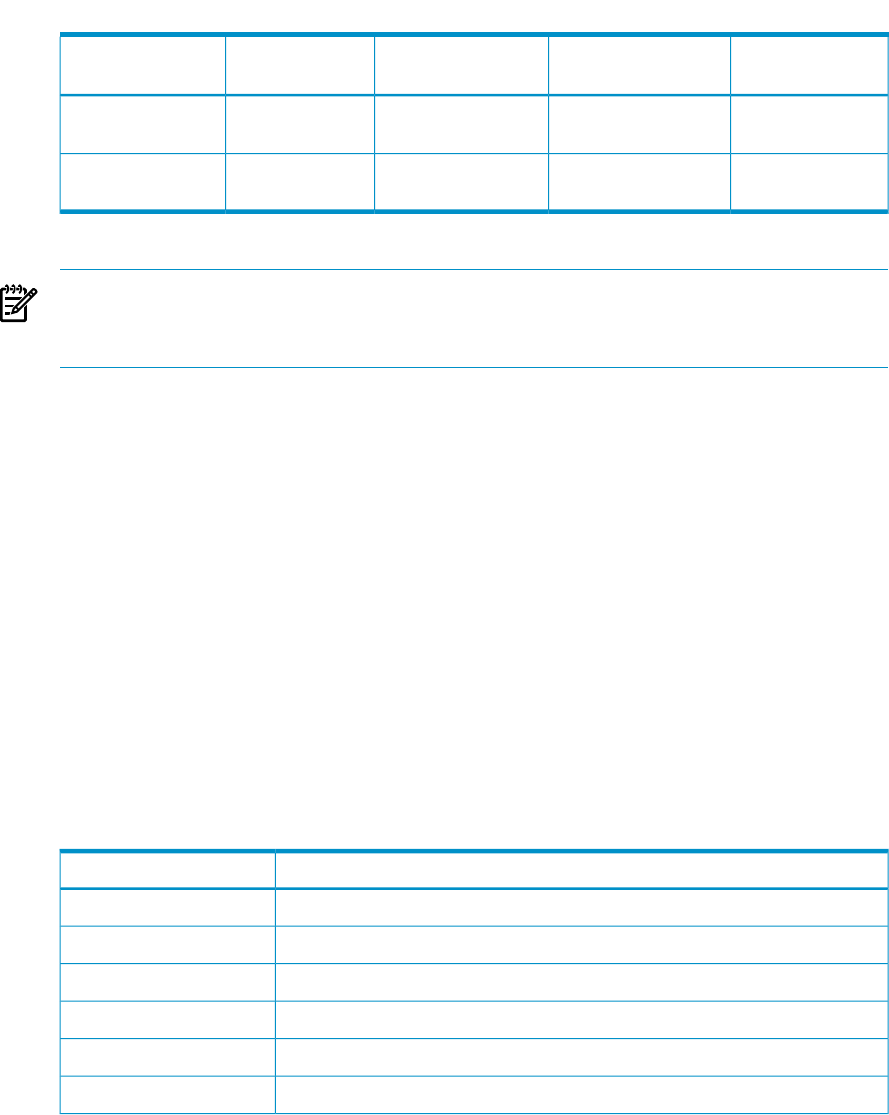

Table 1-2 Interconnect Support for HP-MPI V2.3.1 (continued)

| Protocol | Option | Supported Architectures | NIC Version | Driver Version |
|---|---|---|---|---|
| Quadrics Elan | -ELAN | Itanium, i386, x86_64 | Rev 01 | Elan4 |
| TCP/IP | -TCP | Itanium, i386, x86_64 | All cards that support IP | Ethernet Driver, IP |

Contact your interconnect provider to verify driver support.
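As a rough illustration of how the Option column might be used, the sketch below maps the two protocols listed in this part of Table 1-2 to their options and assembles a launch command without running it. It assumes the option is passed to mpirun on the command line; the function name `interconnect_option`, the rank count, and `./a.out` are placeholders introduced for this example only.

```shell
# Map a protocol name from Table 1-2 to the option that selects it.
# Only the two rows shown in this part of the table are covered.
interconnect_option() {
    case "$1" in
        "Quadrics Elan") echo "-ELAN" ;;
        "TCP/IP")        echo "-TCP" ;;
        *)               return 1 ;;   # protocol not listed here
    esac
}

# Assemble (but do not execute) a command line that selects TCP/IP.
# The rank count and program name are placeholders.
opt=$(interconnect_option "TCP/IP")
launch_cmd="mpirun $opt -np 4 ./a.out"
echo "$launch_cmd"
```

Printing the command rather than executing it keeps the sketch usable on a machine where HP-MPI is not installed.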

NOTE: Support for the Mellanox VAPI protocol has been discontinued in HP-MPI. However, VAPI functionality has not been removed. VAPI support for HP-MPI releases V2.2.5.1 and earlier is still available.

1.2.3 Compilers

HP-MPI strives to be compiler neutral. HP-MPI V2.3.1 for Linux is supported with the following compilers:

• GNU 3.2, 3.4, 4.1

• glibc 2.3, 2.4, 2.5

• Intel 9.0, 9.1, 10.0, 10.1

• PathScale 2.3, 2.4, 2.5, 3.1, 3.2

• Portland Group 6.2, 7.0, 7.1, 7.2

1.2.4 Directory Structure

All HP-MPI (for Linux) files are stored in the /opt/hpmpi directory. Table 1-3 describes the directory structure.

If you choose to move the HP-MPI installation directory from its default location in /opt/hpmpi, set the MPI_ROOT environment variable to point to the new location.
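A minimal sketch of pointing MPI_ROOT at a relocated installation from a POSIX shell follows. The path /usr/local/hpmpi is a hypothetical example, and adding the bin subdirectory to PATH is a convenience assumed here, not a requirement stated in this release note.

```shell
# Hypothetical new install location; replace with the real path.
MPI_ROOT=/usr/local/hpmpi
export MPI_ROOT

# Optional convenience (an assumption, not a documented requirement):
# put the HP-MPI utilities from the bin subdirectory on the search path.
PATH="$MPI_ROOT/bin:$PATH"
export PATH

echo "HP-MPI root: $MPI_ROOT"
```

Placing these lines in a shell startup file would make the setting persist across login sessions.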

Table 1-3 Directory Structure

| Subdirectory | Contents |
|---|---|
| bin | Command files for the HP-MPI utilities, gather_info script |
| sbin | Internal HP-MPI utilities |
| help | Source files for the example programs |
| include | Header files |
| lib/linux_ia32 | HP-MPI Linux 32-bit libraries |
| lib/linux_ia64 | HP-MPI Linux 64-bit libraries for Itanium |
