HP Serviceguard Enterprise Cluster Master Toolkit User Guide (5900-2145, April 2013)

Table Of Contents

- 1 Introduction

- 2 Using the Oracle Toolkit in an HP Serviceguard Cluster

- Overview

- Supported Versions

- Support for Oracle Database Without ASM

- Supporting Oracle ASM Instance and Oracle Database with ASM

- What is Automatic Storage Management (ASM)?

- Why ASM over LVM?

- Configuring LVM Volume Groups for ASM Disk Groups

- Sample command sequence for configuring LVM Volume Groups

- Serviceguard support for ASM on HP-UX 11i v3 onwards

- Framework for ASM support with Serviceguard

- Installing, Configuring, and Troubleshooting

- Setting up DB instance and ASM instance

- Setting up the Toolkit

- ASM Package Configuration Example

- Modifying a Legacy Database Package Using an Older Version of Oracle ECMT Scripts to use the Scripts Provided for ASM Support

- Adding the Package to the Cluster

- Node-specific Configuration

- Error Handling

- Network Configuration

- Database Maintenance

- Configuring and packaging Oracle single-instance database to co-exist with SGeRAC packages

- Configuring Oracle single-instance database that uses ASM in a Coexistence Environment

- Attributes newly added to ECMT Oracle toolkit

- Configuring a modular failover package for an Oracle database using ASM in a coexistence environment

- Configuring a legacy failover package for an Oracle database using ASM in a Coexistence Environment

- ECMT Oracle Toolkit Maintenance Mode

- Supporting EBS database Tier

- Oracle ASM Support for EBS DB Tier

- 3 Using the Sybase ASE Toolkit in a Serviceguard Cluster on HP-UX

- Overview

- Sybase Information

- Setting up the Application

- Setting up the Toolkit

- Sybase Package Configuration Example

- Creating the Serviceguard package using Modular method

- Adding the Package to the Cluster

- Node-specific Configuration

- Error-Handling

- Network configuration

- Database Maintenance

- Cluster Verification for Sybase ASE Toolkit

- 4 Using the DB2 Database Toolkit in a Serviceguard Cluster in HP-UX

- 5 Using MySQL Toolkit in a HP Serviceguard Cluster

- MySQL Package Configuration Overview

- Setting Up the Database Server Application

- Setting up MySQL with the Toolkit

- Package Configuration File and Control Script

- Creating Serviceguard Package Using Modular Method

- Applying the Configuration and Running the Package

- Database Maintenance

- Guidelines to Start Using MySQL Toolkit

- 6 Using an Apache Toolkit in a HP Serviceguard Cluster

- 7 Using Tomcat Toolkit in a HP Serviceguard Cluster

- Tomcat Package Configuration Overview

- Multiple Tomcat Instances Configuration

- Configuring the Tomcat Server with Serviceguard

- Setting up the Package

- Creating Serviceguard Package Using Modular Method

- Setting up the Toolkit

- Error Handling

- Tomcat Server Maintenance

- Configuring Apache Web Server with Tomcat in a Single Package

- 8 Using SAMBA Toolkit in a Serviceguard Cluster

- 9 Using HP Serviceguard Toolkit for EnterpriseDB PPAS in an HP Serviceguard Cluster

- 10 Support and Other resources

- 11 Acronyms and Abbreviations

- Index

Storage considerations

Unless otherwise stated, this toolkit supports all the file systems, storage, and volume managers that Serviceguard supports, including CFS.

Supported configuration

Providing high availability to the EnterpriseDB PPAS instance

This configuration provides automatic failover of the EnterpriseDB PPAS toolkit package to the adoptive node.

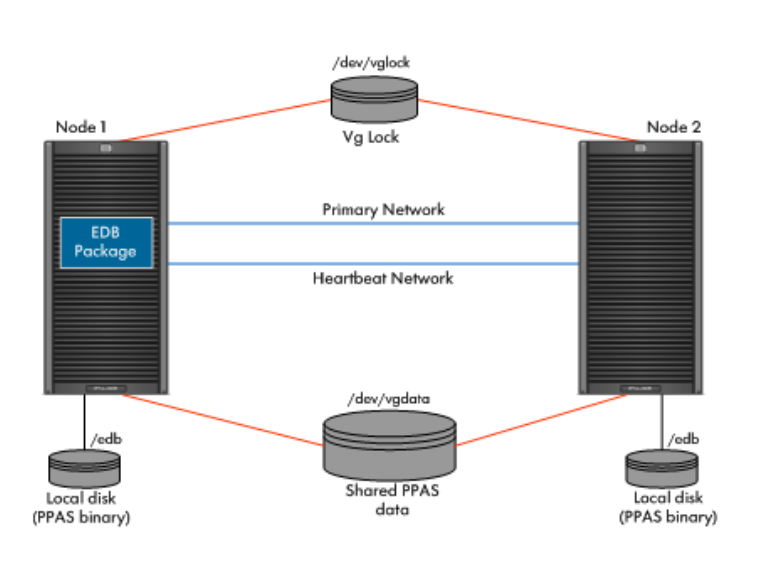

Figure 8 High availability to the EnterpriseDB PPAS instance

In Figure 8, EDB is configured in a volume group shared between Node1 and Node2. EDB is packaged using the HP Serviceguard Toolkit for EnterpriseDB PPAS. The primary package is configured to run on either Node1 or Node2, and is currently running on Node1. If the primary package fails on Node1, it fails over to Node2. The volume group vglock is used as the cluster lock volume group, and vgdata holds the shared data for EDB. vgdata is configured on shared disks that both Node1 and Node2 can access. The EDB binaries are placed on the local file system of each node.
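As a rough illustration of the layout in Figure 8, the fragment below sketches the generic Serviceguard parameters of a modular failover package configuration file for this setup. The package name edb_pkg is hypothetical, the policy values are illustrative choices rather than requirements, and the toolkit-specific attributes added by the HP Serviceguard Toolkit for EnterpriseDB PPAS are deliberately left out. Generate the real template with cmmakepkg and take the exact attribute names from it.

```text
# Sketch only: generic Serviceguard package parameters for the Figure 8 layout.
# edb_pkg is a hypothetical package name; toolkit-specific attributes are omitted.

package_name        edb_pkg
package_type        failover

# Node order matters with configured_node: the first node listed is the
# primary node, the second is the adoptive node.
node_name           Node1
node_name           Node2

auto_run            yes
failover_policy     configured_node
failback_policy     manual

# Shared volume group that holds the EDB data. It is activated on whichever
# node currently runs the package.
vg                  vgdata

# vglock is the cluster lock volume group. It is defined in the cluster
# configuration file, not in the package configuration file.
```

The EDB binaries do not appear in the package storage list because they reside on the local file system of each node.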

When an EDB instance running on the primary node fails, or when the primary node hosting the EDB instance crashes, the EDB package fails over to the adoptive node without any user intervention. Because the shared data required for EDB is placed on shared storage, the EDB instance started on the adoptive node accesses the same data that the instance on the primary node was using.

The advantage of placing the EDB binaries on the local disk is that, when the EDB instance or the node hosting it fails, the adoptive node has its own copy of the EDB binaries, which it needs to start the EDB instance.
