Open Source Object Storage for Unstructured Data: Ceph on HP ProLiant SL4540 Gen8 Servers

Table of contents

- Executive summary
- Introduction
- Overview
- Solution components
- Workload testing
- Configuration guidance
- Bill of materials
- Summary
- Appendix A: Sample Reference Ceph Configuration File
- Appendix B: Sample Reference Pool Configuration
- Appendix C: Syntactical Conventions for command samples
- Appendix D: Server Preparation
- Appendix E: Cluster Installation
  - Naming Conventions
  - Ceph Deploy Setup
  - Ceph Node Setup
  - Create a Cluster
  - Add Object Gateways
    - Apache/FastCGI with 100-Continue
    - Configure Apache/FastCGI
    - Enable SSL
    - Install Ceph Object Gateway
    - Add gateway configuration to Ceph
    - Redeploy Ceph Configuration
    - Create Data Directory
    - Create Gateway Configuration
    - Enable the Configuration
    - Add Ceph Object Gateway Script
    - Generate Keyring and Key for the Gateway
    - Restart Services and Start the Gateway
    - Create a Gateway User
- Appendix F: Newer Ceph Features
- Appendix G: Helpful Commands
- Appendix H: Workload Tool Detail
- Glossary
- For more information

Reference Architecture| Ceph on HP ProLiant SL4540 Gen8 Servers

idea exchange. Inktank is the company delivering Ceph, and its goal is to drive widespread adoption of SDS with Ceph and to help customers scale storage cost-effectively to the exabyte level and beyond.

Enterprise solutions and support

Ceph is already used across a variety of business cases, and ongoing work addresses the needs of enterprise deployments beyond hardening alone. Where the business requires it, Inktank provides professional support for the cluster, as well as professional services such as performance tuning to make the most of cluster resources.

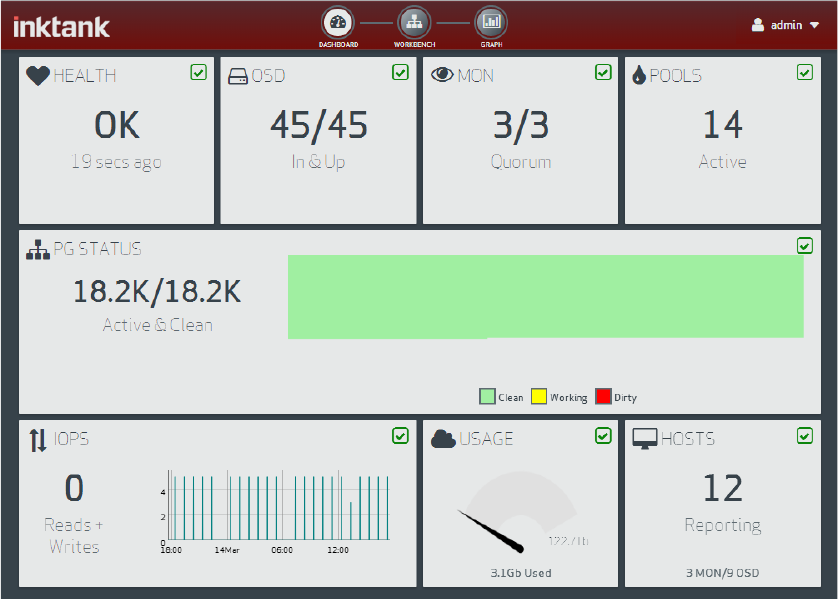

Inktank is also building robust enterprise management software for Ceph. Its graphical manager, Calamari, accelerates and simplifies cluster management by presenting the performance and state data needed to operate a Ceph cluster. Calamari already includes cluster management and is planned to add analytics support during 2014. Additionally, Ceph will add support for SNMP and for hypervisors such as Microsoft Hyper-V and VMware, allowing a Ceph cluster to integrate better into data center and cloud environments.

Figure 5: Calamari v1.1 Screenshot

Use storage that matches the needs of data

Because Ceph provides reliability at the cluster level, it can use non-enterprise-class drives, yielding significant savings at scale. If faster storage is needed, Ceph can be configured to restrict a pool to a higher-performance tier, which is particularly useful for RADOS Block Devices (RBD). Through replication, data consistency, and the reliability of a properly tuned CRUSH map, Ceph provides the enterprise-grade data availability and durability required at petabyte scale and beyond.
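The tier restriction and replication described above rely on placement being computed, not looked up: every client derives the same set of storage devices (OSDs) for an object from the same inputs. The sketch below is not CRUSH. It substitutes rendezvous (highest-random-weight) hashing, a simpler relative of CRUSH's straw buckets, and ignores placement groups, device weights, and failure domains such as hosts or racks. The OSD inventory and the "hdd"/"ssd" tier names are hypothetical.

```python
import hashlib

# Hypothetical OSD inventory: OSD id -> device tier. Illustrative only.
OSDS = {
    0: "hdd", 1: "hdd", 2: "hdd", 3: "hdd",
    4: "ssd", 5: "ssd", 6: "ssd",
}


def _score(object_name: str, osd_id: int) -> int:
    """Deterministic pseudo-random score for an (object, OSD) pair."""
    digest = hashlib.sha256(f"{object_name}:{osd_id}".encode()).digest()
    return int.from_bytes(digest[:8], "big")


def place(object_name: str, tier: str, replicas: int = 3) -> list:
    """Pick `replicas` distinct OSDs from a single tier.

    Only OSDs in the requested tier are candidates, which mimics a pool
    whose rule is restricted to that tier. Each candidate gets a hash
    score and the highest scores win, so any client computes the same
    answer without consulting a central table.
    """
    candidates = [osd for osd, cls in OSDS.items() if cls == tier]
    if len(candidates) < replicas:
        raise ValueError(f"tier {tier!r} has fewer than {replicas} OSDs")
    ranked = sorted(candidates, key=lambda osd: _score(object_name, osd), reverse=True)
    return ranked[:replicas]


if __name__ == "__main__":
    print(place("vm-disk-0001", tier="ssd"))       # three OSDs from the ssd tier
    print(place("backup-2014-01-01", tier="hdd"))  # three of the four hdd OSDs
```

Adding or removing an OSD changes the ranking only for objects whose top scores involve that OSD, which is the same "minimal data movement" property that makes hash-based placement attractive at scale.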

Flexible access methods

A single Ceph storage cluster can provide several methods of storage access. This white paper covers object gateway and block access, but file and native RADOS access methods are also available.

For any kind of storage access, customers generally want methods that are supported across many storage systems. To this end, the object gateway translates requests made through S3- and Swift-compatible APIs into operations on RADOS objects. Existing libraries and applications that use these APIs can therefore be reused rather than rewritten, so use cases such as hybrid public/private cloud setups, S3 repatriation, or heterogeneous object storage environments can share and reuse more code.
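Part of what lets existing S3 client code be reused is that compatibility covers request authentication as well as the operations themselves. As a minimal sketch, assuming the older AWS Signature Version 2 scheme and a request with no `x-amz-*` headers, this is how a client computes the `Authorization` header. Nothing in the computation refers to a particular provider, so the same client logic can be pointed at a Ceph object gateway endpoint. The access and secret keys shown are placeholders, and the helper names are hypothetical.

```python
import base64
import hashlib
import hmac


def sign_v2(secret_key: str, method: str, date: str, resource: str,
            content_md5: str = "", content_type: str = "") -> str:
    """Return an S3 Signature Version 2 value for a simple request.

    StringToSign = Verb \\n Content-MD5 \\n Content-Type \\n Date \\n
    CanonicalizedAmzHeaders + CanonicalizedResource.
    Simplification: no x-amz-* headers, so the amz-header part is empty.
    """
    string_to_sign = "\n".join([method, content_md5, content_type, date]) + "\n" + resource
    mac = hmac.new(secret_key.encode("utf-8"), string_to_sign.encode("utf-8"), hashlib.sha1)
    return base64.b64encode(mac.digest()).decode("ascii")


def authorization_header(access_key: str, secret_key: str, **request) -> str:
    """Build the 'AWS <access key>:<signature>' header value."""
    return f"AWS {access_key}:{sign_v2(secret_key, **request)}"


if __name__ == "__main__":
    print(authorization_header(
        "EXAMPLEACCESSKEY",            # placeholder credentials
        "examplesecret",
        method="GET",
        date="Tue, 27 Mar 2007 19:36:42 +0000",
        resource="/bucket/photos/puppy.jpg",  # path-style bucket/key
    ))
```

In practice an S3 library performs this step; the only change needed to target the gateway is typically the endpoint host and the credentials issued by the gateway.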

Ceph can also present cluster storage through a block interface, which has been supported in the Linux kernel since 2.6.35. Traditional block-oriented applications and standard OS file systems can then use cluster storage directly. Within a cloud environment, RBD integrates with OpenStack Cinder and Glance and can also be used directly by Linux VMs.
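To a block client, an RBD image looks like one contiguous disk, but its data is striped across fixed-size RADOS objects. The following is a minimal sketch of that mapping, assuming the default 4 MiB object size (order 22) and the format-2 `rbd_data.<image id>.<object number>` naming; treat both as assumptions rather than a stable interface. The image id is hypothetical, and the kernel and librbd clients do this mapping internally, not through any function like this one.

```python
# Assumed default RBD object size: 2**22 bytes = 4 MiB ("order 22").
# Images can be created with other object sizes.
DEFAULT_ORDER = 22


def rbd_extents(image_id: str, offset: int, length: int, order: int = DEFAULT_ORDER):
    """Split a block-device byte range into (object name, offset in object, length).

    A single I/O that crosses an object boundary becomes several
    RADOS operations, one per object touched.
    """
    if offset < 0 or length < 0:
        raise ValueError("offset and length must be non-negative")
    object_size = 1 << order
    extents = []
    end = offset + length
    while offset < end:
        object_no = offset // object_size
        in_obj = offset % object_size
        chunk = min(object_size - in_obj, end - offset)
        # Format-2 style name: 16 lowercase hex digits for the object number.
        extents.append((f"rbd_data.{image_id}.{object_no:016x}", in_obj, chunk))
        offset += chunk
    return extents


if __name__ == "__main__":
    MiB, KiB = 1 << 20, 1 << 10
    # A 1 MiB write starting 512 KiB before the first 4 MiB boundary.
    for extent in rbd_extents("10226b8b4567", 4 * MiB - 512 * KiB, 1 * MiB):
        print(extent)
```

The example write touches two objects, half in each, which is why object size is a tuning parameter: larger objects mean fewer RADOS operations per large I/O, smaller objects spread load across more OSDs.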

Although CephFS has received less development focus (current plans call for updated support in the latter half of 2014), it is still viable and in use today for scale-out file system workloads. It also provides HDFS offload, leveraging the data locality of replicas along with overall cluster management.
